Much of the data encountered in computer science is not Euclidean. DNA consists of sequences of characters over a 4-letter alphabet; proteins and chemical compounds have a graph-like structure. Data can also live on graphs (e.g. individuals as nodes of a social network, or functional data associated with mesh nodes). Such data is complex, with non-linear patterns of variability, but it is also highly structured. It is crucial to leverage and learn this structure, whether to perform robust inference from few examples or to make the best use of large datasets. Structure can also be problem-specific and take the form of ‘quasi-invariances’ that we wish to enforce in the machine/deep/statistical learning algorithm. This is a growing interest of mine, and something that I have explored in the context of shape analysis.

Statistical shape analysis involves statistics in non-Euclidean spaces. Indeed, the pose of an object (translation, orientation) is irrelevant to its shape, and sometimes its scale is irrelevant to the analysis as well. Taking pose and/or scale out of the equation without biasing the analysis is challenging, since for all practical purposes we expect data to be somewhat corrupted or noisy. For instance, if objects have been delineated as surface or volumetric meshes from images, or reconstructed from point clouds, the resulting mesh representation is faithful to the true geometry only up to some degree of accuracy. In fact, robustness to small random variations in the mesh definition is generally a desirable property for shape analysis algorithms.
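For contrast with the spectral approach, here is a classical way of taking pose and scale out of the equation: ordinary Procrustes alignment. It is not the method discussed on this page, and it assumes that the two shapes are given as `(n, d)` point arrays whose rows are in known point-to-point correspondence. Both sets are centered, optionally rescaled to unit centroid size, and the rotation minimizing the residual is obtained from an SVD. The function names `normalize` and `procrustes_align` are illustrative only.

```python
import numpy as np


def normalize(X, scale=True):
    """Remove translation (and optionally scale) from an (n, d) point set."""
    Xc = X - X.mean(axis=0)  # translation: move the centroid to the origin
    if scale:
        Xc = Xc / np.linalg.norm(Xc)  # scale: unit centroid size (Frobenius norm)
    return Xc


def procrustes_align(X, Y, scale=True):
    """Rotate normalized Y onto normalized X (ordinary Procrustes).

    Assumes row i of X corresponds to row i of Y.
    Returns the aligned copy of Y and the residual Frobenius distance.
    """
    Xn, Yn = normalize(X, scale), normalize(Y, scale)
    # The rotation R minimizing ||Xn - Yn R||_F maximizes <Yn^T Xn, R>,
    # which is solved by the SVD of Yn^T Xn.
    U, _, Vt = np.linalg.svd(Yn.T @ Xn)
    D = np.eye(X.shape[1])
    D[-1, -1] = np.sign(np.linalg.det(U @ Vt))  # forbid reflections
    R = U @ D @ Vt
    Y_aligned = Yn @ R
    return Y_aligned, np.linalg.norm(Xn - Y_aligned)
```

On noise-free data related by a rigid motion and a scaling, the residual is zero up to rounding. On real, noisy meshes the estimated rotation and scale are themselves affected by the noise, which is exactly the difficulty described above.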

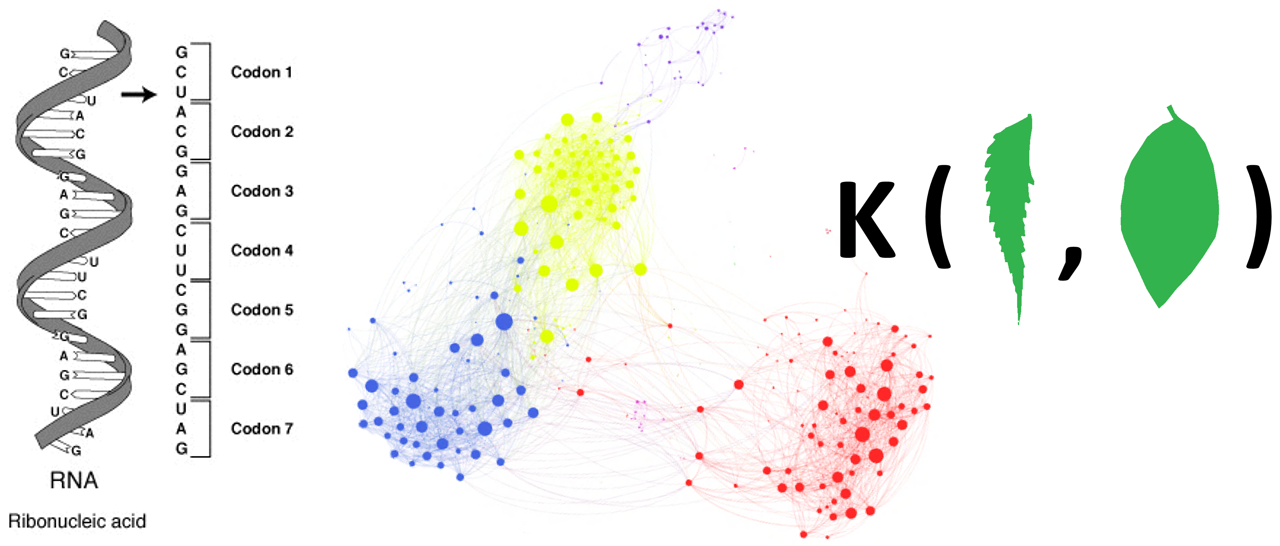

Here and in these slides, the problem is approached from the standpoint of spectral shape analysis and kernel methods. Kernel methods make it possible to replace non-linear statistics on complex data types with linear statistics on a functional representation of the data points. The kernel encodes the notion of ‘proximity’ / ‘similarity’ / ‘alignment’ that we want to enforce. In the proposed approach, we build ‘spectral shape kernels’ from geometric invariants derived from spectral analysis. By design, spectral shape kernels are invariant to pose (and to scale if desired), and they can be tuned to emphasize either global shape properties or finer local details, depending on the task and on the amount of noise in the data. These ideas apply in various settings, including supervised classification, unsupervised clustering and probabilistic analysis of shapes.
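To make the idea of a spectral shape kernel more tangible, here is a minimal sketch. It is not the construction from the slides, and every modelling choice in it is an assumption made for illustration. Each shape is a point cloud `(n, 3)`. Its spectrum is that of the symmetric normalized Laplacian of a Gaussian affinity graph with a hypothetical bandwidth `sigma`. The shape descriptor is the heat trace `h(t) = mean_i exp(-t * lambda_i)`, sampled at chosen `times`. Two shapes are compared with a Gaussian kernel on these descriptors, with a hypothetical parameter `gamma`. The graph depends only on pairwise distances, so the spectrum is invariant to rotations, reflections, translations and point ordering. Rescaling to unit RMS radius adds scale invariance.

```python
import numpy as np


def laplacian_spectrum(X, sigma=0.5, scale_invariant=True):
    """Eigenvalues of the normalized graph Laplacian of a Gaussian affinity graph on X (n, d)."""
    X = X - X.mean(axis=0)  # center (needed before rescaling)
    if scale_invariant:
        X = X / np.sqrt((X ** 2).sum(axis=1).mean())  # unit RMS radius
    # Pairwise squared distances are unchanged by rigid motions.
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-sq / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    d = W.sum(axis=1)
    # L = I - D^{-1/2} W D^{-1/2}; its eigenvalues lie in [0, 2].
    L = np.eye(len(X)) - W / np.sqrt(np.outer(d, d))
    return np.linalg.eigvalsh(L)


def heat_trace(eigvals, times):
    """Normalized heat trace h(t) = mean_i exp(-t * lambda_i), one value per t."""
    return np.exp(-np.outer(times, eigvals)).mean(axis=1)


def spectral_kernel(X, Y, times, gamma=10.0, **kw):
    """Gaussian kernel between the heat-trace descriptors of two point clouds."""
    hx = heat_trace(laplacian_spectrum(X, **kw), times)
    hy = heat_trace(laplacian_spectrum(Y, **kw), times)
    return float(np.exp(-gamma * np.sum((hx - hy) ** 2)))


times = np.logspace(-1, 2, 20)  # from small (fine detail) to large (global) times
```

Unlike Procrustes alignment, this descriptor needs no point-to-point correspondence, and the two clouds may even have different numbers of points. The choice of `times` is where the global/local trade-off shows up. Large values of `t` suppress the high end of the spectrum, so the descriptor is dominated by coarse, global structure. Small values keep the contribution of high-frequency eigenvalues, which carry finer detail (and more of the noise). `sigma` plays a similar role by setting the spatial scale of the graph.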