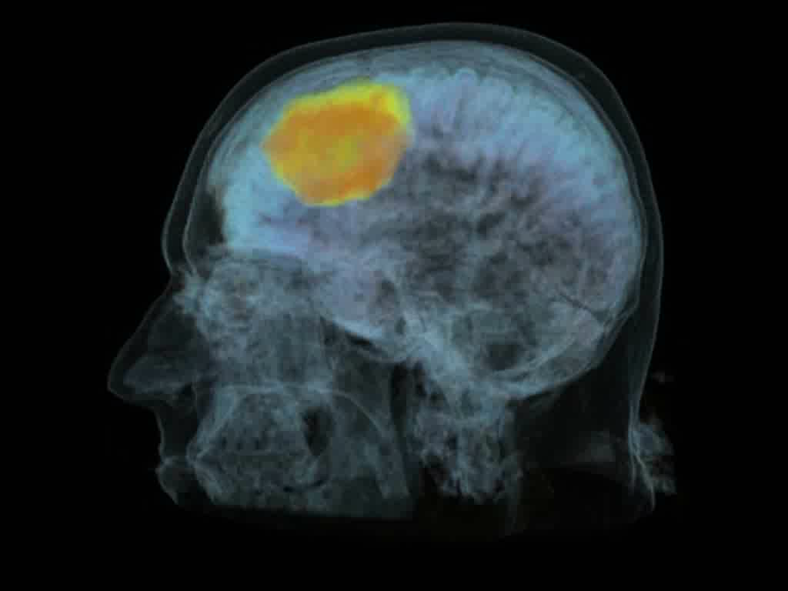

I am currently a visiting postdoctoral fellow at Microsoft Research Cambridge, thanks to the MSR-Inria Joint Centre. I am a member of the InnerEye team, a research project focused on quantitative medical image analysis. I was previously with the Asclepios team at Inria.

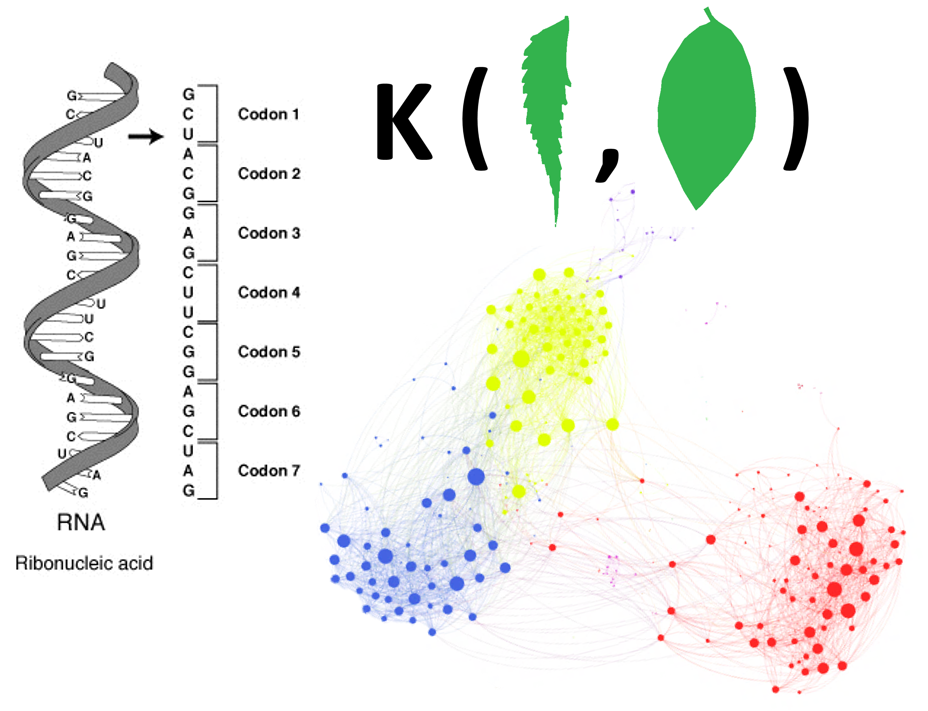

I am interested in data analysis, including machine learning, signal processing and information geometry: anything involving algorithms that automatically understand & leverage structure in complex data.

I’m always keen to hear about other fields of applied maths, ML & beyond: vision, computational biology & physics, agent-based models, language processing, etc.

Ph.D., ML for Medical Imaging, 2016

Inria / University of Nice Sophia Antipolis

M.Sc. in Maths, Vision, Learning, 2012

ENS Cachan, MVA program

Diplôme d'Ingénieur, Department of Applied Mathematics, 2012

Ecole Centrale Paris

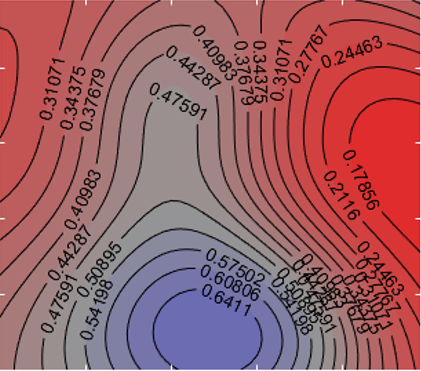

Non-rigid registration schemes supplement incomplete observations (image data) with prior assumptions (e.g. plausible object deformations, image formation). They operate under data noise, model biases and uncertainties. The ability to quantify those uncertainties and to analyze the soundness of model assumptions is very appealing, especially if registration is part of a larger pipeline. The project aims at a fully Bayesian treatment of registration, as a natural framework to address these questions.

Decision forests have been very successful in image segmentation, as a simple, fast and extremely flexible approach well suited to CPU architectures. I developed a framework called BONSAI that cascades small decision forests to allow for more natural semantic reasoning in structured classification tasks. It also exposes and exploits hidden semantics within decision forests, which is used to implement a form of guided bagging that significantly increases precision and recall.

Computer science seldom deals with Euclidean data. Data is complex and varies in nonlinear patterns, but it is also highly structured. It is crucial to leverage and learn this structure, whether to do robust inference from few examples (or one example?) or to make the best use of large datasets. Sometimes structure is also problem-specific and takes the form of ‘quasi-invariances’ that we wish to enforce in the learning model. This is a growing interest of mine, and something that I have explored in the context of shape analysis.

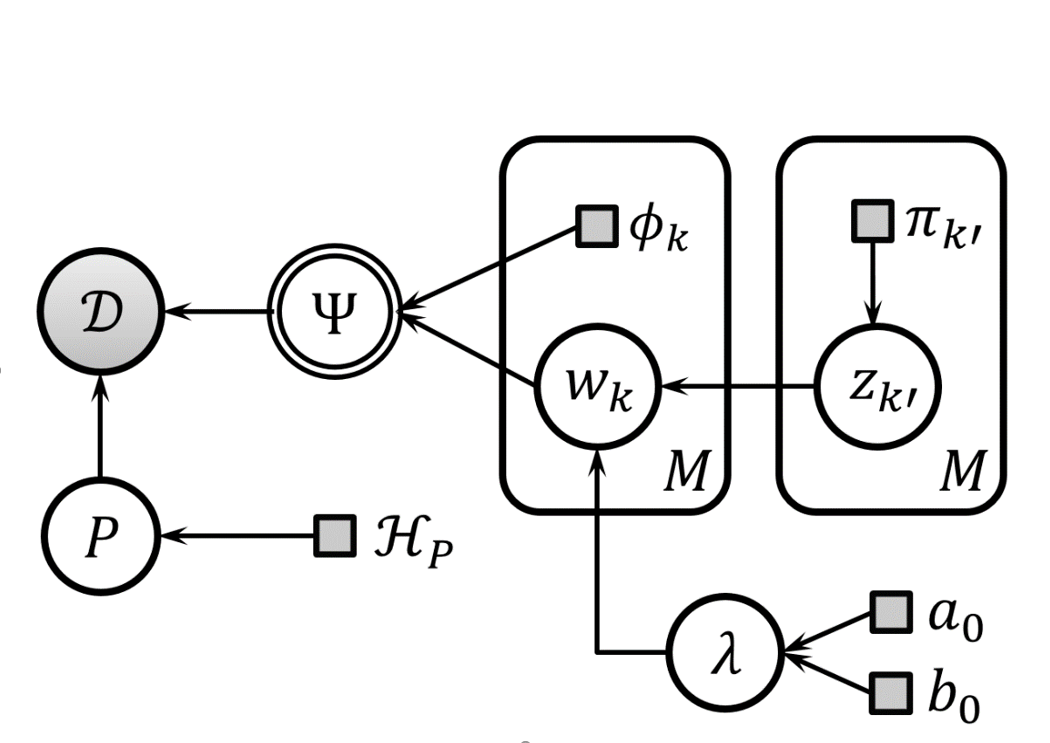

Sparse priors have many uses and motivations – from robust regularization, to reducing the computational complexity of algorithms, to model selection & interpretable machine learning. Sometimes we want to combine the benefits of sparse learning with structured priors that encode a notion of ‘smoothness’ for the problem at hand. This project is about efficient inference schemes for structured sparse learning, in particular with Bayesian priors.